Validate a Startup Idea Before Development: 5 Experiments That Work

Many startups fail not because of bad execution, but because they build products nobody truly needs. For non-technical founders especially, validating an idea before development is one of the most cost-effective decisions they can make. This guide explains five practical experiments for early-stage validation that don't require writing code or hiring a full dev team. Each experiment helps reduce risk, clarify demand, and inform smarter MVP decisions.

TL;DR: You don’t validate a startup idea by building an MVP and hoping users show up. You validate it by testing demand, behavior, and willingness to pay before development. These five experiments help founders learn fast, spend less, and build with confidence.

Why validation must come before development

Development is expensive — even for a small MVP.

When founders skip validation, they risk:

- building the wrong features

- targeting the wrong audience

- running out of budget before learning anything meaningful

Validation flips the order: learn first, build second.

If you’re still shaping your concept, “I Have a Startup Idea but No Developer: What to Do Next” is a helpful starting point.

Experiment 1: Problem interviews

The fastest validation tool is conversation.

Instead of pitching your idea, ask:

- how people solve this problem today

- what frustrates them most

- what they’ve already tried

Look for patterns, not compliments.

If no one describes the problem in their own words, that’s a red flag.

To avoid common early mistakes, read “MVP Development for Non-Technical Founders: Common Mistakes”, which explains where interviews often go wrong.

Experiment 2: Landing page with a clear value proposition

A simple landing page tests interest without building the product.

Effective pages focus on:

- one clear problem

- one specific outcome

- one action (signup, waitlist, demo request)

Send real traffic to the page and measure how many visitors take the action: that conversion rate is your signal.

If nobody signs up, the problem or message isn’t strong enough.
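If you want to check whether a conversion rate is a real signal or just noise from a small sample, a quick calculation helps. The sketch below is one way to do it, assuming you already have visitor and signup counts from your analytics tool. It uses the Wilson score interval, a standard statistical technique that is not part of the original experiment, and the 5% target rate in the example is a hypothetical benchmark you should replace with your own.

```python
import math


def wilson_interval(conversions: int, visitors: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a conversion rate."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    if not 0 <= conversions <= visitors:
        raise ValueError("conversions must be between 0 and visitors")
    p = conversions / visitors
    denom = 1 + z**2 / visitors
    centre = (p + z**2 / (2 * visitors)) / denom
    margin = (z / denom) * math.sqrt(p * (1 - p) / visitors + z**2 / (4 * visitors**2))
    return max(0.0, centre - margin), min(1.0, centre + margin)


def landing_page_signal(conversions: int, visitors: int, target_rate: float) -> str:
    """Classify a landing-page result against a target rate.

    target_rate is an assumption you choose; there is no universal benchmark.
    """
    low, high = wilson_interval(conversions, visitors)
    if low >= target_rate:
        return "strong"        # even the pessimistic estimate beats the target
    if high < target_rate:
        return "weak"          # even the optimistic estimate misses the target
    return "inconclusive"      # not enough traffic to tell yet


# Hypothetical numbers: 40 signups from 500 visitors, 5% target.
print(landing_page_signal(40, 500, target_rate=0.05))
```

With 40 signups from 500 visitors, the whole plausible range sits above a 5% target, so the result reads as strong; 3 signups from 200 visitors falls below it. Very small samples usually come back inconclusive, which is a cue to send more traffic rather than to draw conclusions.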

For founders planning a lean first version, “Pre-Seed MVP Development for Unfunded Startups on a Budget” explains how to avoid premature build costs.

Experiment 3: Fake-door features

Fake-door tests measure intent.

You present a feature as if it exists and track clicks or requests.

This helps answer:

- which features users care about

- which ones can wait

- what’s actually worth building

This experiment is especially useful when prioritizing scope.
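To turn fake-door clicks into a priority list, compare click rates rather than raw click counts, since features shown to more users naturally collect more clicks. The sketch below assumes you log, for each fake button, how many users saw it and how many clicked. The feature names, counts, and the 100-impression minimum are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FakeDoorResult:
    feature: str      # hypothetical feature name shown on the fake button
    impressions: int  # how many users saw the button
    clicks: int       # how many of them clicked it

    @property
    def click_rate(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def prioritize(results: list[FakeDoorResult], min_impressions: int = 100) -> tuple[list[str], list[str]]:
    """Rank features by click rate; set aside those without enough impressions."""
    enough = [r for r in results if r.impressions >= min_impressions]
    too_few = [r.feature for r in results if r.impressions < min_impressions]
    ranked = sorted(enough, key=lambda r: r.click_rate, reverse=True)
    return [r.feature for r in ranked], too_few


# Hypothetical fake-door data.
results = [
    FakeDoorResult("Team sharing", impressions=380, clicks=19),
    FakeDoorResult("CSV export", impressions=400, clicks=60),
    FakeDoorResult("AI summary", impressions=50, clicks=20),
]
ranked, needs_more_data = prioritize(results)
print("Build first:", ranked)
print("Keep testing:", needs_more_data)
```

Features without enough impressions are kept separate instead of ranked, so a lucky early streak on a rarely seen button doesn't jump to the top of your roadmap.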

For deeper guidance, “How to Prioritize Features When You’re Bootstrapping Your Startup” provides a practical framework.

Experiment 4: Concierge or manual MVP

Instead of building automation, do things manually.

Examples include:

- manual onboarding

- spreadsheets instead of dashboards

- human workflows instead of algorithms

If users won’t engage when it’s manual, automation won’t save the idea.

When moving toward a real MVP, “MVP Development Services for Startups: What’s Actually Included” clarifies what should be automated and what shouldn’t.

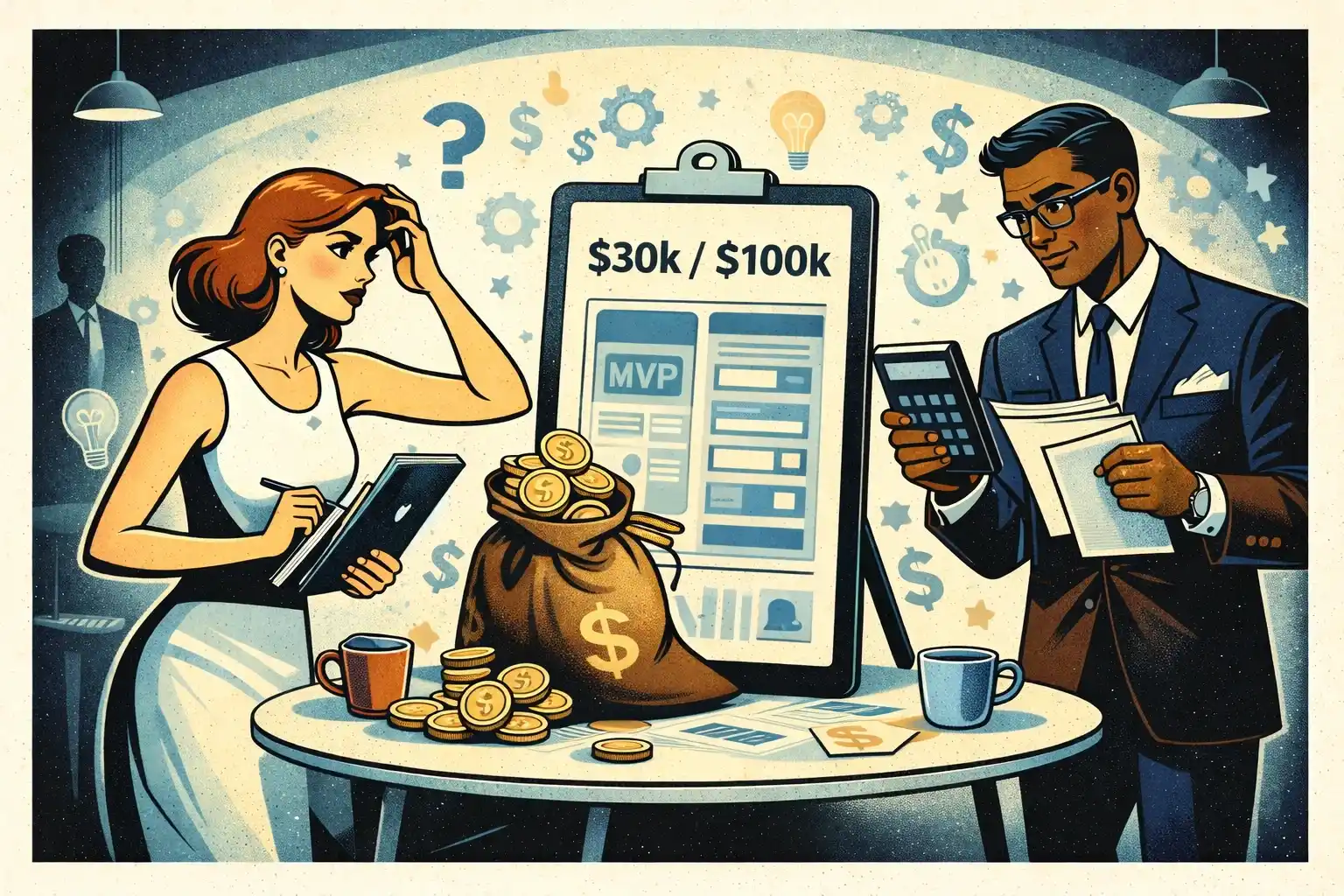

Experiment 5: Pricing and willingness-to-pay tests

Interest without payment is weak validation.

Early pricing tests can include:

- asking directly what users would pay

- offering paid early access

- presenting pricing on a landing page

You’re testing value, not revenue scale.

For realistic expectations, “MVP Development Cost Breakdown for Early-Stage Startups” helps align pricing with development effort.

How to interpret validation signals

Strong signals look like:

- repeated problem descriptions

- users asking when they can try it

- willingness to commit time or money

Weak signals include:

- vague interest

- “nice idea” feedback

- no follow-up actions

Validation is about behavior, not opinions.

When to move from validation to development

You’re ready to build when:

- the problem is clear and frequent

- the target user is well-defined

- at least one experiment shows real traction

At that point, development becomes a calculated risk — not a blind bet.

If you’re planning execution, “Web Development for Non-Technical Founders: A Step-by-Step Guide” outlines the next phase clearly.

Final takeaway

Great startups don’t start with code.

They start with evidence.

By running these five experiments, you dramatically increase your odds of building something people actually want — before spending months on development.

Validated interest but unsure how to turn it into an MVP?

At Valtorian, we help founders translate validation insights into lean, buildable MVPs — without overengineering or wasted budget.

Book a call with Diana

Get clarity on scope, experiments, and next steps.

FAQ — Startup Idea Validation

Do I really need validation before building?

Yes. Validation reduces risk and saves budget.

Can validation replace an MVP?

No — but it determines what your MVP should be.

How long should validation take?

Usually 2–4 weeks.

What if validation results are mixed?

Refine the idea and rerun experiments.

Do I need developers for validation?

No. Most experiments require no code.

Is a landing page enough validation?

It’s a strong signal, but it’s more reliable when combined with interviews.

What’s the biggest validation mistake?

Confusing interest with commitment.
