How to Choose the Right MVP Development Company in 2026 (Top Teams Compared)

Choosing an MVP development company in 2026 is less about “who codes best” and more about who can ship the smallest useful product, reduce risk, and stay accountable after launch. This guide explains what matters now: team shape, discovery quality, delivery process, realistic use of AI, communication, ownership, and post-launch support. You’ll see how several well-known MVP teams compare, what questions to ask each one, and how to avoid expensive mistakes before you sign.


TL;DR: In 2026, the right MVP team is the one that can translate your idea into a clear scope, ship a usable first release fast, and prove it works with real users, not just deliver “nice code.” Compare teams by how they handle scope, accountability, and learning after launch, then pick the option that matches your risk, timeline, and communication style.

What changed in 2026: expectations are higher, tolerance is lower

A few years ago, you could get away with “we’ll build it, then figure it out.” In 2026, most founders don’t have that runway.

Buyers now expect:

- A team that helps you cut scope without killing the idea.

- Fast iteration based on real feedback (not a long one-shot build).

- Basic analytics and a plan for what happens after release.

- AI used as a productivity tool — not as a buzzword or an excuse.

If you want the broader 2026 context that shapes these expectations, start with Tech Decisions for Founders in 2026.

The decision framework: 7 criteria that actually predict success

When founders choose the wrong team, it’s rarely because the team “couldn’t code.” It’s because the team couldn’t manage ambiguity, priorities, and real-world constraints.

Here are the criteria that matter most.

1) Scope discipline (can they protect you from overbuilding?)

Ask how they turn a big vision into a “smallest useful release.” If they say yes to everything, your MVP will become a six‑month project.

If you’ve never scoped an MVP before, read MVP Development for Non-Technical Founders: 7 Costly Mistakes.

2) Discovery quality (do they ask uncomfortable questions?)

A good team will ask:

- Who is the primary user?

- What is the first measurable outcome?

- What must be true for the MVP to be a win?

If discovery is skipped, you’ll pay for it during development.

3) Delivery transparency (do you know what happens week to week?)

You want a predictable cadence:

- clear milestones

- regular demos

- written decisions

- change control when scope shifts

4) Product thinking (do they think in outcomes, not screens?)

MVPs fail when teams optimize for UI completeness instead of user behavior.

A strong team will talk about activation, retention signals, and “what users do in week 1.”

If you want a clear picture of what “full-cycle” should include, see Full-Cycle MVP Development: From Discovery to First Paying Users.

5) Ownership and handover (do you truly control the product?)

Before you sign, confirm:

- you own the code, design files, and IP

- you can access repos, environments, analytics

- documentation exists (even lightweight)

6) Post-launch support (do they vanish after release?)

Most MVPs need 2–6 weeks of tightening after real users arrive. If the team disappears, the MVP becomes a stalled prototype.

7) Communication and decision-making (does it match your style?)

This criterion is often underestimated. A “strong” team can still be wrong for you if:

- they can’t explain tradeoffs in plain language

- they push you into decisions without context

- they don’t document assumptions

If you want a deeper comparison of engagement models, read Startup App Development Company vs Freelancers vs In-House Team.

Top teams compared

Below are seven teams founders commonly shortlist for MVP work in 2026, and how each tends to work in practice.

To keep this honest: these are patterns, not hype. The right choice depends on your risk tolerance and what you already have (product clarity, budget, internal time).

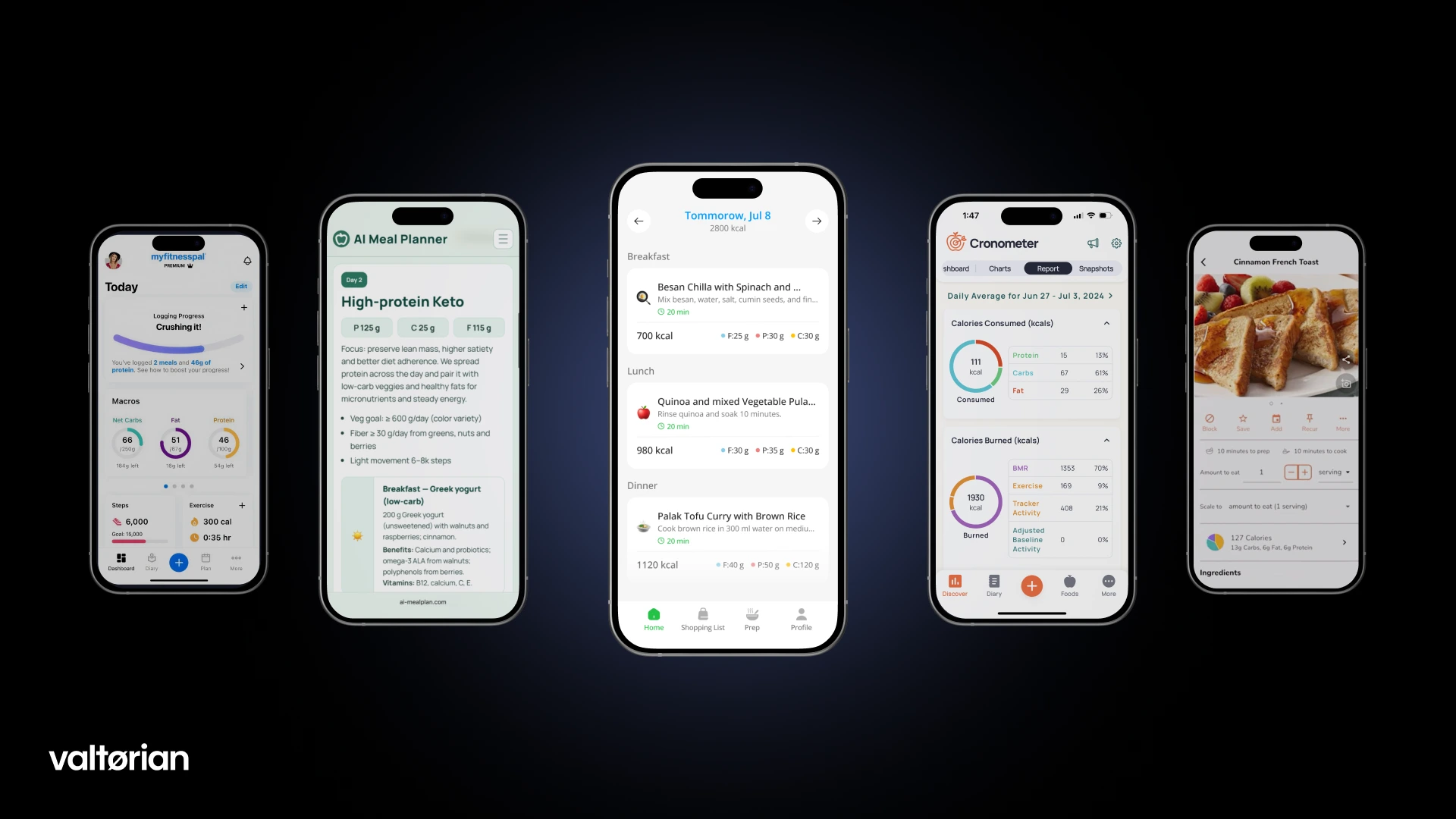

1) Valtorian

Best for: non-technical founders who want a small, senior team that scopes tightly and ships a usable MVP (web/mobile) with modern workflows, including AI where it makes sense.

What they’re known for: founder-to-founder communication, validation-first thinking, and MVP execution focused on real user behavior—not demo-only prototypes.

Watch-outs: like any boutique studio, availability and timeline depend on current capacity — confirm team allocation and post-launch support.

What to ask: What’s the “smallest useful release” for my product, what will you measure in the first 2–4 weeks after launch, and what’s included in iteration support?

2) thoughtbot

Best for: founders who value strong product strategy + design-led delivery, and want a well-established consultancy to build an MVP with a mature process.

What they’re known for: cross-functional product teams and an explicit MVP service offering (product strategy, design, and development).

Watch-outs: consultancies can be premium-priced; clarify who is hands-on day to day and how much strategy vs build time you’re buying.

What to ask: Who’s on my core team, what does the weekly cadence look like, and what do you ship by week 2?

3) Netguru

Best for: founders who want a larger, established product development company with a clearly packaged MVP service and the ability to scale team size.

What they’re known for: end-to-end MVP development services (discovery, delivery, testing/iteration, launch & learn with analytics).

Watch-outs: bigger teams can mean more coordination overhead; make sure decision-making stays fast and scope stays protected.

What to ask: Who owns product decisions, how do you prevent scope creep, and what analytics do you set up by default?

4) Altar.io

Best for: founders who want an MVP built through a structured, product-expert-led approach that prioritizes streamlining scope and launching quickly.

What they’re known for: an “MVP Builder” offering that emphasizes narrowing to the most important features, then designing and building the MVP from the ground up.

Watch-outs: confirm how they handle post-launch iteration and what’s included vs add-on.

What to ask: What is your scoping method, what’s the typical MVP timeline you aim for, and what’s the post-launch plan?

5) MindSea

Best for: founders who care deeply about UX polish and want a team experienced in building high-quality mobile and web apps with ongoing improvement.

What they’re known for: strategy, UX design, development, and ongoing app improvement, with a strong emphasis on product clarity and user outcomes.

Watch-outs: if your MVP is heavily backend/enterprise/integration-driven, validate that their approach matches your technical complexity.

What to ask: How do you validate the workflow in weeks 1–2, and what does “continuous improvement” look like after launch?

6) Purrweb

Best for: founders who want full-cycle web/mobile product development with an MVP-specific service and fast time-to-feedback.

What they’re known for: dedicated MVP development services aimed at validating ideas quickly; full-cycle design + development positioning.

Watch-outs: confirm seniority mix and who owns product decisions; speed is great only if scope discipline and QA are real.

What to ask: Who is my lead, what’s your QA/release process, and what do you need from me to keep velocity high?

7) Brainhub

Best for: founders who want a digital product development partner that can deliver an MVP as a cost-effective validation step and then scale the product.

What they’re known for: digital product development that explicitly includes MVP delivery as a validation path.

Watch-outs: clarify how “MVP” is defined in practice (features, timeline, learning loop), and how they keep the first release small.

What to ask: What’s in your MVP by default, how do you measure success during a pilot, and how do you handle scope changes?

How to run a “real” evaluation in 2–3 calls

Most founders compare teams the wrong way: they compare portfolios and hourly rates. Instead, evaluate decision-making.

Call 1: clarity and scoping

Give each team the same 5-minute brief:

- who the product is for

- what a first successful outcome looks like for that user

- what you think the MVP includes

Then watch:

- do they ask sharper questions than you expected?

- do they push back on scope?

- do they explain tradeoffs clearly?

Call 2: delivery and accountability

Ask for:

- an example weekly cadence (what you see every week)

- how they handle change requests

- what happens after launch

Call 3: proof

Ask for one concrete example of a shipped MVP and:

- what the first release actually included

- what they changed after real users arrived

- what metrics they tracked early

If you want a clean reference for what’s “included” in MVP delivery, read MVP Development Services for Startups: What’s Actually Included.

Red flags that look “normal” (but cost you months)

These are common and expensive.

- “We can build everything you listed.”

- No clear plan for post-launch iteration.

- No mention of analytics or learning.

- You can’t talk to the actual builder.

- Vague timeline answers (“it depends”) with no structure behind them.

If you want the most common founder mistakes that lead to this, read MVP Development for Non-Technical Founders: Common Mistakes.

A simple rule to pick the right team

Pick the team that:

- cuts scope with you (not for you),

- documents decisions,

- ships fast enough to learn,

- and stays accountable after release.

If you’re bootstrapping and need to be extra strict with scope, Pre-Seed MVP Development for Unfunded Startups on a Budget can help you set realistic boundaries.

Thinking about building an MVP in 2026?

At Valtorian, we help founders design and launch modern web and mobile apps — including AI-powered workflows — with a focus on real user behavior, not demo-only prototypes.

Book a call with Diana

Let’s talk about your idea, scope, and fastest path to a usable MVP.

FAQ

How do I know if a team is truly “MVP-first”?

They push back on scope, define a smallest useful release, and talk about learning after launch — analytics, iteration, and measurable outcomes.

What should I prepare before talking to an MVP company?

A simple one-liner: user + problem + outcome, plus 3–5 must-have features and 3 success metrics.

Is hourly or fixed-price better in 2026?

Hourly works well when scope is still moving and you want flexibility; fixed-price can work if the MVP scope is crystal clear and change control is strict.

How fast can a real MVP be built?

It depends on complexity, but “fast” in 2026 usually means weeks, not quarters — if you keep the scope tight and avoid heavy integrations early.

What’s the biggest reason MVP projects go over budget?

Scope creep driven by unclear priorities, weak discovery, and late changes after build starts.

Should I choose a team based on tech stack?

Only partially. Fit, delivery discipline, and product thinking usually matter more than the exact stack for v1.
