Estimating MVP Scope in 2026: A Simple Method

Most MVP estimates break because founders estimate “features,” not the user outcome. In 2026 you can ship faster, but scope still balloons when requirements aren’t tied to one repeatable value loop. This guide gives you a simple method to estimate scope without technical jargon: define the core loop, translate it into user stories, size them with a lightweight system, and add the missing “hidden work” that always appears (QA, edge cases, analytics, deployment). You’ll leave with a scope you can defend and a budget you can trust.

TL;DR: To estimate MVP scope, stop listing features and start mapping one repeatable user loop. Turn that loop into a short set of “must-have” stories, size them in simple buckets, and add a realistic buffer for the unglamorous work.

Why MVP scope estimates fail (even in 2026)

AI tools and faster frameworks changed speed. They did not change the physics of scope.

Most early estimates fail for the same reasons:

- You’re estimating a wishlist, not a loop. A list of features doesn’t tell you what the user must be able to complete.

- You’re missing hidden work. QA, error handling, empty states, analytics, deployment, and “how do we handle weird cases?” always show up.

- You’re estimating the “happy path” only. Real users don’t behave like your demo.

- You’re mixing prototype thinking with MVP thinking. A prototype proves the idea can be shown; an MVP proves the idea can be used.

If you’re still deciding whether you should build a prototype first, read Prototype vs MVP in 2026: When to Start Building.

The simple method: Loop → Stories → Sizing → Buffers → Scenarios

This is the method we use because it’s easy to explain, easy to align on, and hard to abuse.

Step 1: Pick one user and one “job”

Write this in plain language:

- Primary user: (who uses it most)

- Job-to-be-done: (what they’re trying to accomplish)

Example structure: “I’m building for [user] who needs to [job] so they can [outcome].”

If you can’t write that sentence, any estimate will be unstable.

Step 2: Define the core loop in one line

A loop is something the user can do more than once.

Use this format: Trigger → Action → Outcome → Reason to return

Keep it brutally simple.

If you’re unsure what “good” looks like for a first release, start with What a Good MVP Looks Like in 2026.

Step 3: Map the “first session” and the “second session”

Most MVPs are scoped around the first session (onboarding + first win). Retention depends on the second.

Write two short flows:

First session:

- how they arrive

- what they must do to reach first value

Second session:

- what makes them return

- what is faster than the first time

If your onboarding is complex, you’ll feel it right here. Use MVP Onboarding in 2026: Flows That Drive Activation as a sanity check.

Step 4: Convert the loop into “must-have” user stories

Now translate the flow into user stories.

A user story should sound like: “As a [user], I can [action], so that [outcome].”

Rules for MVP scope:

- Include only stories that are required to complete the loop end-to-end.

- If a story exists to “look professional” but doesn’t change the outcome, push it to later.

- If a story exists because you’re afraid users won’t understand, that’s usually a UX problem, not a feature problem.

If you want a quick filter for what truly belongs in first release, read MVP Scope and Focus in 2026.

Step 5: Add the “hidden work” stories (don’t skip this)

Founders underestimate because they don’t budget for the boring parts.

Add short stories for:

- QA on the critical path (signup → first value → repeat)

- Edge cases and empty states (no data, invalid data, timeouts)

- Basic analytics (activation + drop-off points)

- Basic admin / operations (even a simple internal panel or config)

- Deployment and releases (stores, hosting, environments)

This is also where many “cheap MVPs” become expensive later. If you’ve ever been burned by a rebuild, Why Cheap MVPs Fail in 2026 will feel familiar.

Step 6: Size each story with a simple bucket system

You don’t need perfect sizing. You need consistent sizing.

Use buckets:

- S (small)

- M (medium)

- L (large)

- XL (too big for MVP — split it)

Important: define what each bucket means for your team.

A practical way to do it:

- Ask your dev lead: “What is a typical S vs M vs L for us?”

- Use one example story you all agree on, then size others relative to it.

If you don’t have a dev lead yet, you can still use sizing to keep scope honest:

- Anything you can’t size confidently is probably under-defined.

- Anything that looks like XL should be broken down until it becomes M/L pieces.

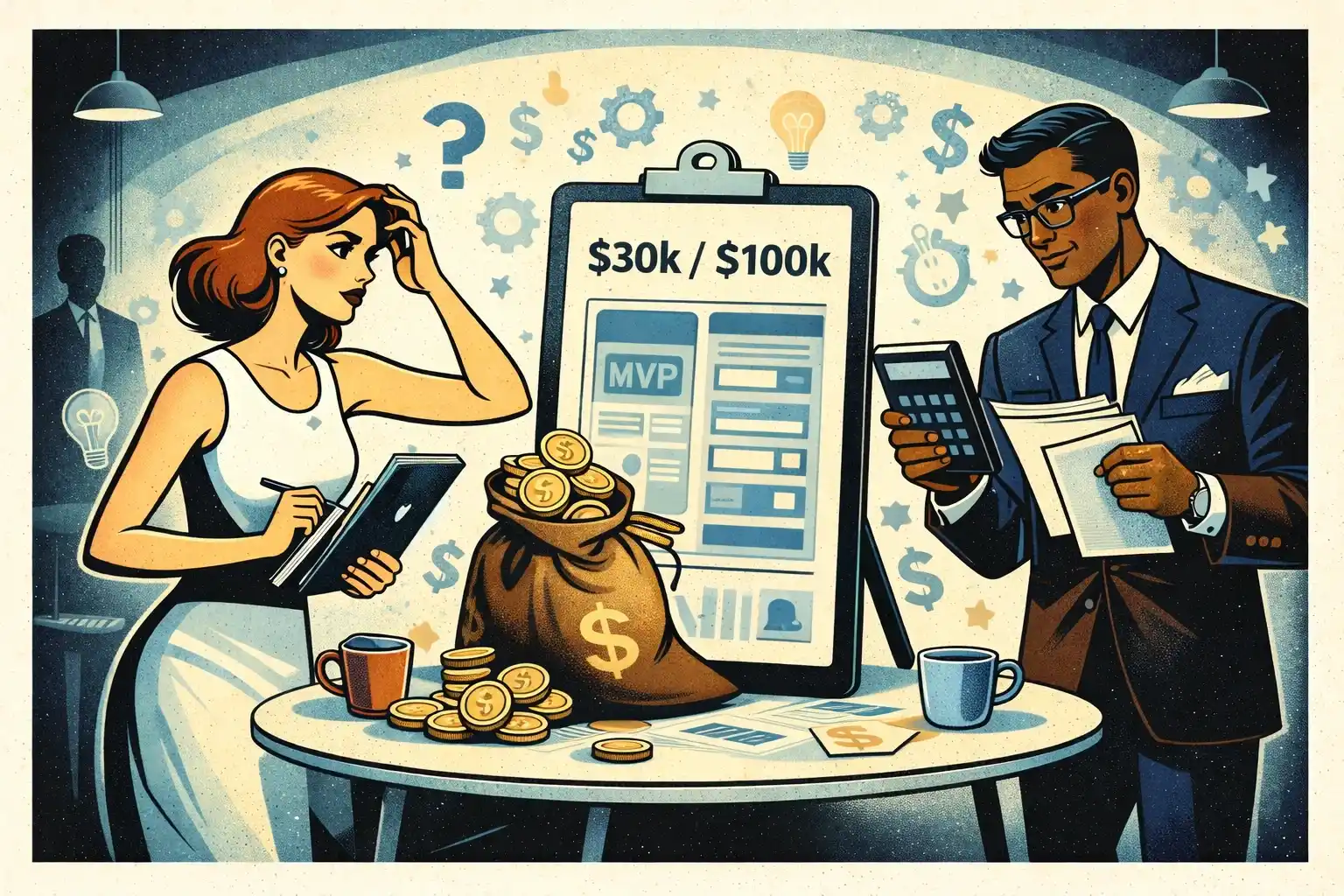
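
One way to keep bucket sizing consistent is to give each bucket a relative weight and add the weights up. The sketch below is a minimal Python illustration of that idea. The weights (1, 2, 4) and the story names are assumptions made up for this example, not values from this guide; calibrate them with your own team.

```python
# Hypothetical bucket weights: relative effort units, not hours or days.
# These numbers are assumptions; agree on your own with your dev lead.
BUCKET_POINTS = {"S": 1, "M": 2, "L": 4}


def total_points(stories):
    """Sum relative effort for (name, bucket) pairs.

    XL stories are rejected, because they should be split before estimating.
    """
    total = 0
    for name, bucket in stories:
        if bucket == "XL":
            raise ValueError(f"Split before estimating: {name}")
        total += BUCKET_POINTS[bucket]
    return total


# Illustrative stories only.
stories = [
    ("Sign up with email", "S"),
    ("Create first project", "M"),
    ("Share project with a teammate", "L"),
]

print(total_points(stories))  # 1 + 2 + 4 = 7
```

The total is only meaningful relative to your agreed reference story. Rejecting XL outright is a design choice here: it forces the split to happen before the number exists.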

Step 7: Convert sizes into a range (minimum / likely / safe)

This is where founders usually demand a single number. Don’t do that.

Build three scenarios:

- Minimum (only must-haves, no nice-to-haves)

- Likely (must-haves + realistic edge cases + basic analytics)

- Safe (likely + buffer for unknowns)

Even if you don’t publish these numbers publicly, this structure stops scope arguments later.
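
The three scenarios are simple arithmetic once the stories are sized. The sketch below shows one possible way to compute them in Python. The function name, the effort units, and the sample numbers are all assumptions for illustration; the guide itself does not prescribe specific values.

```python
def build_scenarios(must_have, hidden_work, buffer_ratio):
    """Return minimum / likely / safe totals in the same relative units.

    must_have    -- effort for stories required to complete the loop
    hidden_work  -- effort for edge cases, analytics, QA, and similar work
    buffer_ratio -- assumed share added for unknowns (0.25 means 25%)
    """
    minimum = must_have
    likely = must_have + hidden_work
    safe = likely * (1 + buffer_ratio)
    return {"minimum": minimum, "likely": likely, "safe": safe}


# Sample inputs are made up for the example.
estimate = build_scenarios(must_have=20, hidden_work=8, buffer_ratio=0.25)
print(estimate)  # {'minimum': 20, 'likely': 28, 'safe': 35.0}
```

Presenting all three values together, rather than only the safe one, keeps the conversation about which assumptions separate the scenarios.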

If you want a realistic perspective on timing, compare your scenarios to How Long MVP Development Takes in 2026.

Step 8: Add a buffer — because unknowns are real

A buffer isn’t “padding.” It’s insurance against the parts you cannot see yet.

What typically creates unknowns:

- third-party integrations

- authentication and permissions

- payment flows

- data migration/import

- app store review friction

- performance issues from real usage

Rule of thumb (not a guarantee): If your scope touches external APIs, payments, or multiple roles, your safe estimate should include a meaningful buffer.
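
To make the rule of thumb concrete, a team could add a fixed percentage for each risk factor the scope touches. The Python sketch below is one hypothetical way to do that. Every percentage in it is an assumption invented for this example, not a recommendation from this guide; replace them with figures from your own past projects.

```python
# Hypothetical buffer percentages per risk factor (assumptions, not benchmarks).
RISK_BUFFER_PERCENT = {
    "external_apis": 10,
    "payments": 10,
    "multiple_roles": 5,
}

# Assumed floor that covers ordinary unknowns even with no listed risks.
BASE_BUFFER_PERCENT = 10


def buffer_percent(risks):
    """Return the total buffer percentage for a collection of risk names."""
    return BASE_BUFFER_PERCENT + sum(RISK_BUFFER_PERCENT[r] for r in risks)


print(buffer_percent(["payments", "external_apis"]))  # 10 + 10 + 10 = 30
```

An unrecognized risk name raises a `KeyError`, which is deliberate in this sketch: a new kind of unknown should be discussed and given a number rather than silently ignored.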

If you want a clearer view of where money disappears, see MVP Budget in 2026: Where Money Should Go.

How to keep the scope stable once you’ve estimated it

A good estimate can still fail if the scope keeps moving.

Use these guardrails:

1) Freeze the loop

The loop is your MVP contract with reality. If a change doesn’t improve the loop, it waits.

2) Limit “nice-to-haves” to a small lane

Don’t argue about them daily. Collect them, rank them, and review them once a week.

3) Apply a simple change rule

When someone adds a new story, one of these must happen:

- remove another story of similar size, or

- accept a timeline/budget increase, or

- move the new story to the next version
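
The change rule can be written down as a small decision function so that nobody has to argue about it case by case. The Python sketch below is a minimal illustration. The bucket weights, the function name, and the reading of “similar size” as “at least as large as the new story” are assumptions made for this example.

```python
# Hypothetical bucket weights, in relative effort units.
BUCKET_POINTS = {"S": 1, "M": 2, "L": 4}


def apply_change(scope, name, bucket, remove=None, accept_increase=False):
    """Apply the change rule to a scope dict of {story name: bucket}.

    Returns (updated_scope, decision). The input scope is not modified.
    """
    updated = dict(scope)
    if remove is not None:
        # Assumption: "similar size" means the removed story is not smaller.
        if BUCKET_POINTS[updated[remove]] < BUCKET_POINTS[bucket]:
            raise ValueError("Removed story is smaller than the new one")
        del updated[remove]
        updated[name] = bucket
        return updated, "swapped"
    if accept_increase:
        updated[name] = bucket
        return updated, "timeline/budget increased"
    # Default outcome: the new story waits for the next version.
    return updated, "deferred to next version"


# Illustrative scope and request.
scope = {"Sign up": "S", "Export PDF": "M"}
print(apply_change(scope, "Import CSV", "M", remove="Export PDF"))
print(apply_change(scope, "Dark mode", "S"))
```

Making deferral the default mirrors the intent of the rule: adding scope requires an explicit trade, while postponing requires nothing.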

4) Validate before you build the expensive parts

If you’re unsure about demand for a feature, validate it first. A quick framework is in MVP Testing in 2026: What to Validate First.

The “scope estimate” you should be able to say out loud

If your estimate is good, you can explain it in 30 seconds:

“We’re building one core loop for one user. The first release includes X must-have stories to complete the loop, plus QA, analytics, and edge-case handling. We sized stories in buckets, built three scenarios, and added a buffer for integrations and unknowns.”

That’s a scope you can defend — to your team, to partners, and to yourself.

Thinking about building a startup MVP in 2026?

At Valtorian, we help founders design and launch modern web and mobile apps — including AI-powered workflows — with a focus on real user behavior, not demo-only prototypes.

Book a call with Diana

Let’s talk about your idea, scope, and fastest path to a usable MVP.

FAQ

How detailed should an MVP scope be before development starts?

Detailed enough that everyone agrees on the core user loop, the must-have stories, and what is explicitly out of scope. If you still have “big blanks,” you’ll pay for them later.

Can I estimate scope without a technical cofounder?

Yes. Start with the loop and user stories. Then bring a senior dev (or a small studio) to size stories and surface hidden work like QA, edge cases, analytics, and deployment.

What’s the fastest way to cut scope without ruining the product?

Cut breadth, not the loop. Keep the end-to-end outcome intact and remove optional branches, advanced settings, extra roles, and secondary workflows.

How do I prevent scope creep once development begins?

Use a change rule: any new story must replace something, move to later, or increase timeline/budget. Review nice-to-haves on a schedule, not in daily chats.

Should an MVP include analytics in 2026?

Yes — at least the basics. If you can’t see activation and drop-offs, you won’t know what to improve, and every decision becomes opinion-based.

How do I know if my estimate is realistic?

Check whether it includes non-feature work (QA, error handling, deployment, analytics), has scenario ranges (minimum/likely/safe), and accounts for integrations, multiple roles, or payments.
